The expert testimony to NICE that took apart the case for CBT and graded exercise for ME/CFS

Update: the final guidelines, which apparently said much the same as the initial ones, were due to be published on 18 August, 2021. Unexpectedly, NICE announced on 17 August that it was pulling publication – apparently after coming under pressure from supporters of CBT and graded exercise.

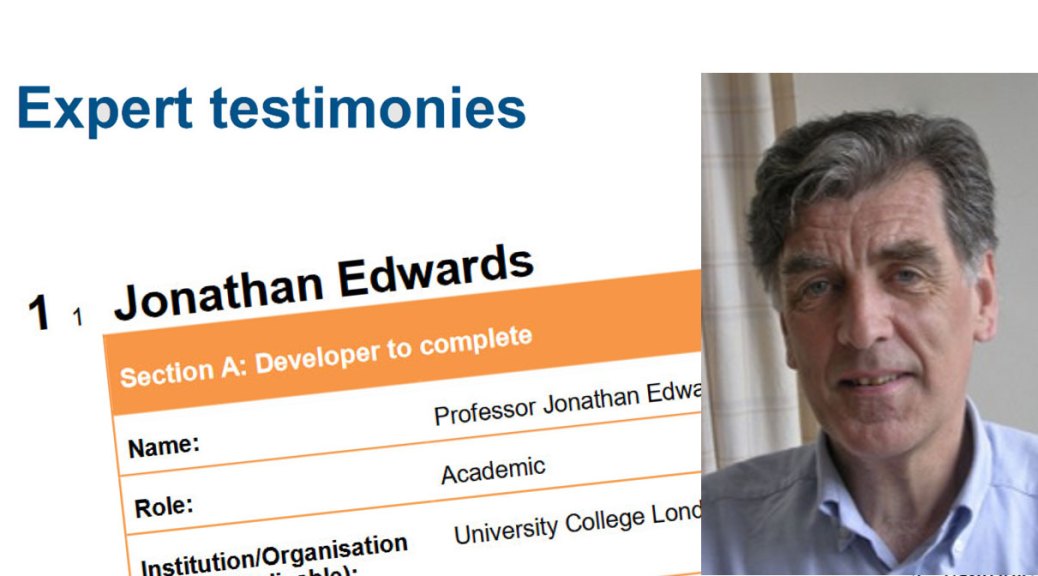

Professor Jonathan Edwards told NICE it should not recommend either CBT or graded exercise as all the trial evidence for them used subjective outcomes in unblinded trials, giving unreliable results. He showed why blinding in ME/CFS trials is essential, despite PACE supporters claiming it is not. Edwards said it was also unethical to tell patients that the treatments are safe, effective and based on sound theories as there are serious doubts about all three claims. Finally, he argued that quality control in research for this kind of study is broken and needs fixing.

In 2007, NICE recommended cognitive behavioural therapy (CBT) and graded exercise therapy as the only suitable treatments for ME/CFS. Its new draft guidelines, published in November 2020, say no to all graded exercise. And no to any CBT that assumes ME/CFS is caused by patients’ flawed beliefs or behaviours.

The driving force for this U-turn was the guideline committee’s evidence review, which rated almost all of the evidence for CBT or graded exercise as very low quality. The remainder was rated as low quality.

Probably the main reason for these damning ratings was Professor Jonathan Edwards’ expert testimony to the ME/CFS guidelines committee.

His testimony brilliantly exposed the deep flaws in the evidence for CBT and graded exercise. Effectively, Edwards, a specialist in clinical trial design, hammered a box of nails into the coffins of CBT and graded exercise for ME/CFS.

The central problem: flawed ME/CFS treatment studies tell us nothing useful

Edwards highlighted the central problem, that CBT and graded exercise studies have relied on biased methods that produce unreliable results.

Most clinical trials of drugs are run blinded, which means that patients don’t know if they are getting the active drug or are receiving a dummy pill in the “control” group.

Success is defined not as the improvement in the drug group alone, but whether or not people in the drug group improve by substantially more than those in the placebo group.

Such blinding is usually required in biomedical treatment studies because it is well known that if patients are asked how they feel, their answers can be biased because they expect to feel better or they want to please researchers. This is known as expectation bias and blinding is there to protect against this.

Blinding is much less of an issue when a study uses objective outcomes, such as changes in blood sugar levels or exercise capacity. However, for subjective outcomes, such as patients scoring their own fatigue levels, expectation bias is a major problem.

Edwards told the committee that bias “is an aspect of human nature that affects all types of scientific experiment, whether in the lab or in the clinic. If outcome measures are truly objective blinding is not necessary, and vice versa. For this reason, unblinded trials with subjective outcomes are specifically considered unreliable.”

The PACE trial as well as all the other psychosocial treatment trials in ME/CFS were unblinded, and so problems of bias, said Edwards, “make more or less all the trials to date unsuitable as a basis for treatment recommendation.”
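The logic of the blinding argument can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a model of PACE or of any real trial: the function name `simulate_trial`, the size of the expectation effect and the sample size are all hypothetical. It assumes a treatment with no true effect at all, a self-rated outcome, and that knowing you are getting the therapy nudges your self-rating upwards. In the blinded version both arms share that expectation, so it cancels out in the comparison; in the unblinded version only the treated arm has it.

```python
import random
from statistics import mean


def simulate_trial(n, true_effect, expectation_bias, blinded, seed=0):
    """Return the apparent benefit: mean self-rated improvement in the
    treated arm minus the mean in the control arm.

    All quantities are hypothetical and in arbitrary units.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    treated, control = [], []
    for _ in range(n):
        # Treated participants always expect to improve (assumption).
        treated.append(true_effect + expectation_bias + rng.gauss(0, 1))
        # Blinded controls hold the same expectation, because they cannot
        # tell which arm they are in. Unblinded controls know they are not
        # getting the therapy, so they get no expectation boost (assumption).
        control_bias = expectation_bias if blinded else 0.0
        control.append(control_bias + rng.gauss(0, 1))
    return mean(treated) - mean(control)


if __name__ == "__main__":
    for blinded in (True, False):
        gap = simulate_trial(
            n=2000, true_effect=0.0, expectation_bias=5.0, blinded=blinded
        )
        print(f"blinded={blinded}: apparent benefit = {gap:.2f}")
```

With these made-up numbers the blinded comparison comes out close to zero, while the unblinded one reports a "benefit" roughly equal to the expectation effect, even though the treatment does nothing. That is the pattern Edwards describes: an unblinded trial with a subjective outcome cannot separate a real effect from expectation.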

But does blinding really matter for ME/CFS?

Despite the compelling case for bias undermining trials of CBT and graded exercise, the PACE trial authors and their supporters have tried to justify the continued use of flawed methods.

They have argued that placebo effects in ME/CFS are likely to be small and short-lived. More generally, Professor Sir Simon Wessely states in his “how to” guidebook for running clinical trials in psychiatry that blinding is nice – but not necessary. Wessely has even called the unblinded PACE trial “a thing of beauty”.

Edwards pointed to the clear evidence from rituximab studies that blinding is as essential for ME/CFS studies as it is for any other disease.

Following a promising pilot study of rituximab for ME/CFS, the researchers looked to find the most effective dose of the drug to use.

All the patients in the dosing study knew they were on rituximab. There was also no control group, but all the same, the study showed major gains for patients. Things were looking good.

But a subsequent blinded study by the same team showed that rituximab had no real effect. While those getting the drug improved, just as in the dosing study, a similar, high proportion of those getting the placebo also improved. This result underscores the problem of expectation bias and shows why blinding is so important in ME/CFS studies – despite the claims of PACE authors.

“Unblinded trials like PACE provide us with no useful information”

Edwards went on to point out that drug companies spend hundreds of millions of dollars a year to ensure blinding in trials, and they wouldn’t do that if it wasn’t necessary to meet the high standards needed for drug approval. Whatever Wessely might claim, Edwards said, blinding “is necessary in order to get reliable results”.

He concluded that “Unblinded trials like PACE provide us with no useful information because treatment with no specific beneficial action [such as the placebo in the rituximab trial] can give the same result.”

The psychologist Brian Hughes, who has written about the crisis in psychology research, paraphrased Edwards more bluntly: “bad evidence is no evidence at all”.

Edwards argued that there are two reasons why bias is an even bigger issue in trials of psychosocial therapy than in drug trials.

First, patients are told that the treatments work, increasing the level of expectation bias. Second, patients are encouraged to take a positive attitude to problems, playing down symptoms. This is also likely to lead to patients rating their symptoms as less severe than they really are.

The blinding conundrum – no free pass for psychosocial research

Edwards argued throughout his testimony that the high standards required for drug trials should apply equally to psychosocial treatment trials.

Of course, it simply isn’t possible to conceal from a patient that they are getting CBT or graded exercise. That blinding is not possible does not make the problem go away. Instead, says Edwards, it is down to the researchers to solve this problem in order to get reliable results.

He added, “Unreliable results cannot be seen as reliable just because it is difficult to get more reliable ones.”

Edwards pointed out that it is possible to design a system that ensures that key subjective outcomes are supported by objective ones. This has been done in his field of rheumatoid arthritis.

Although there was no such system in place in any ME/CFS psychosocial trials, PACE did measure some objective outcomes. The subjective gains in self-reported physical function and fatigue were not supported by corresponding gains in the objective outcomes. This means, said Edwards, that the best available evidence is that CBT and graded exercise do not work.

Unethical to continue using CBT and graded exercise

Edwards pointed to three ethical concerns with using these treatments.

1. Unsupported theories

Both CBT and graded exercise, argued Edwards, appear to be delivered in a framework that assumes patients have unhelpful beliefs about their capacity for exercise. Edwards said that he had seen no evidence that this view was “any more than popular prejudice”.

The theory that exercise is a route to recovery for people with ME/CFS is equally problematic. Maximal exercise tests, said Edwards, provide at least some evidence that patients’ physiological response to exercise is different in ME/CFS.

2. Evidence of harm

Edwards said that from a theoretical perspective, increasing activity is as likely to harm as it is to help.

Which makes it all the stranger that despite being willing to rely on subjective self-reports of improvements, those backing psychosocial treatments have been unwilling to consider literally thousands of self-reports from patients of harm. Edwards cites Tom Kindlon’s paper exposing the widespread reporting of harms by patients.

Edwards said, “There are good reasons to think psychosocial therapy and exercise therapy can do harm, particularly if they involve misrepresentation of knowledge about an illness.”

3. Claims the treatments work

As Edwards chronicled in his evidence, the treatments don’t work.

Given the problems with the claims made for CBT and graded exercise, Edwards said, it is unethical to tell patients the treatments are based on sound theory, unethical to say they are safe and unethical to claim they work.

Quality control: the “broken field” and the unsayable criticism

That CBT and graded exercise continue to be used and recommended despite the evidence shows, said Edwards, that quality control has broken down.

It became clear then that the existing approach of using unblinded studies with subjective outcomes couldn’t produce useful results. So Edwards was surprised to discover years later that researchers of psychosocial therapies for ME/CFS were still pursuing such a flawed approach.

Research quality is supposed to be policed by peer review, where fellow researchers check the standards of studies submitted for funding or publication.

This requires that the peer reviewers understand the problems and reject work that is flawed. It appears that they don’t, as Edwards illustrated with three examples.

1. The most egregious issue with the PACE trial was the authors moving the goalposts for what counted as recovery. They abandoned their original, published definition, replacing it with a much weaker one after they had seen the study’s results.

The authors justified the change by saying that the revised, much more positive results were more consistent with previous studies and their clinical experience. Edwards pointed out that the authors “do not seem to realise that outcome measures need to be predefined in order to avoid exactly this sort of interference from expectation bias”.

2. Cochrane reviews systematically evaluate the evidence on a particular topic. These reviews are generally highly regarded but Edwards highlighted problems with Cochrane work on ME/CFS.

The most recent Cochrane review of CBT for ME/CFS comes to a guarded conclusion about short-term benefit and emphasises the need for more studies. Edwards points to problems that suggest that even these conclusions are overoptimistic.

A more recent review on graded exercise, which concluded it was a good thing, has been heavily criticised. Even Cochrane itself has now expressed reservations about the review.

Both reviews failed to address the problem of bias in unblinded studies. And Edwards highlighted that both reviews were authored by junior colleagues of a PACE trial researcher. This is not the independence that is needed.

3. Edwards discovered first-hand problems with peer review in the field when he submitted a paper that mentioned the problem with subjective outcomes in unblinded trials. Peer reviewers wanted him to drop that point, not because the critique was wrong, “but because it would cast doubt on almost all treatment studies in clinical psychology”.

“Something is badly wrong”, said Edwards, “the disciplines involved need to take a long hard look at themselves.”

Why CBT and graded exercise have to go

Edwards concluded by telling the NICE guidelines committee he believed NICE should not recommend either CBT or graded exercise for ME/CFS. He said this applies to both adults and children. The evidence for the therapies is unreliable because of bias and there are significant ethical problems with their continued use.

Professor Jonathan Edwards’ expert testimony is a brilliant and brutal demolition of the case for CBT and graded exercise as treatments for ME/CFS. While he drew on the evidence of many others, his testimony is perhaps the most impressive single critique of flaws in the evidence used to justify these treatments. It deserves to get a wider audience.

My blog focuses on what seem to me to be the most important points of Edwards’ testimony. You can read his full submission [PDF], starting on Page 5.

Researchers whose work pointing out flaws in ME/CFS research was referenced by Professor Edwards

Lead authors: Tom Kindlon, Dr Carolyn Wilshire, Mark Vink, Alexandra Vink-Niese, Dr Keith Geraghty, Professor Brian Hughes. Co-authors: Robert Courtney (RIP), Alem Matthees, David Tuller, Professor Bruce Levin. Links are to work referenced by Edwards but not included in my blog.

4 thoughts on “The expert testimony to NICE that took apart the case for CBT and graded exercise for ME/CFS”

Surely under ‘What is ok and not ok in clinical trials’ the line with the cross beside it should say ‘Unblinded trials with subjective outcomes’? It currently says ‘blinded trials’ and reads the same as one of the options two lines higher up with a tick beside it.

Thanks for spotting. I’ve got a migraine right now but will fix ASAP

Thank you to Prof Jonathan Edwards, for a robust, germane and fair criticism of flawed methods in published papers purporting to study CBT and GET interventions in ME/CFS treatment.

Additional commentary on the field also warranted and helpful.

Thanks are also due to Dr David Tuller for his work exposing the flaws in these publications, also to Simon McGrath for publishing these facts.

It’s very heartening to see sense beginning to prevail. Long may it continue.

Great summary! You should add the recruitment bias mentioned by Edwards: the fact that patients who had already had a negative experience with exertion may have desisted from registering for a GET intervention study. (Which in my opinion could also mean that ME/CFS patients more self-assured about their self-care needs may have desisted from registering, while exactly the more suggestible patients may have registered…).

Based on viewing the Tom Kindlon article link, two further methodological issues would be:

Use of older, broader Oxford CFS criteria including about 15% of participants that did not report post-exertional malaise (and would thus probably not be considered ME/CFS patients according to newer criteria) – not sure how this factored into the results of the trial,

and the bar for reporting harm seems to have been set high (e.g.: “death”, “deterioration…of at least 4-week continuous duration”; “…at two consecutive assessment interviews”), so to me it looks like short-term post-exertional malaise and key post-exertional features of CFS (and thus a lot of suffering) may not have been captured under this methodology of reporting harm. If my understanding is correct, they may have measured whether the patient left the trial permanently worse, but not how much the patient suffered due to temporary post-exertional malaise?

Also, for CFS patients who are not severe, their state can vary greatly over the course of time due to factors independent of the PACE trial (for example just where in your push&crash cycles you happen to be, i.e. whether you’ve just recovered back to your baseline, or crashed again; circumstantial factors influencing the amount of strain or stress relief outside the trial etc.) I am not familiar enough with the trial to know whether they tried to correct for such factors, but these would certainly be relevant for whether an effect could be attributed to the trial intervention (i.e. the question whether these trials have been so-called controlled experiments).
